About

My research interests lie in Human-Computer Interaction (HCI), Human-Centered Computing, and Human Behavior Modeling, as well as Transportation Informatics and Health Informatics. My goal is to bridge the gap between technological expertise and user-friendly experiences by leveraging advanced technologies and creating solutions aligned with user preferences. I am now a PhD student at the Ubicomp Lab at the National University of Singapore, working with Prof. Brian Lim. Before joining NUS, I spent 2 years as a Research Assistant at the COOLA Lab at Southeast University, where I worked with Prof. Yan LYU and Prof. Wanyuan Wang.

Publications

- CHI'26

Editable XAI: Toward Bidirectional Human-AI Alignment with Co-Editable Explanations of Interpretable Attributes

H Chen, J Bai, T Fang, B Lim

[Link]

- AAAI'24

i-Rebalance: Personalized Vehicle Repositioning for Supply Demand Balance

H Chen, P Sun, Q Song, W Wang, W Wu, W Zhang, G Gao, Y Lyu

[Link]

- AIAHPC'22

Research Projects

- Editable XAI: Toward Bidirectional Human-AI Alignment with Co-Editable Explanations of Interpretable Attributes

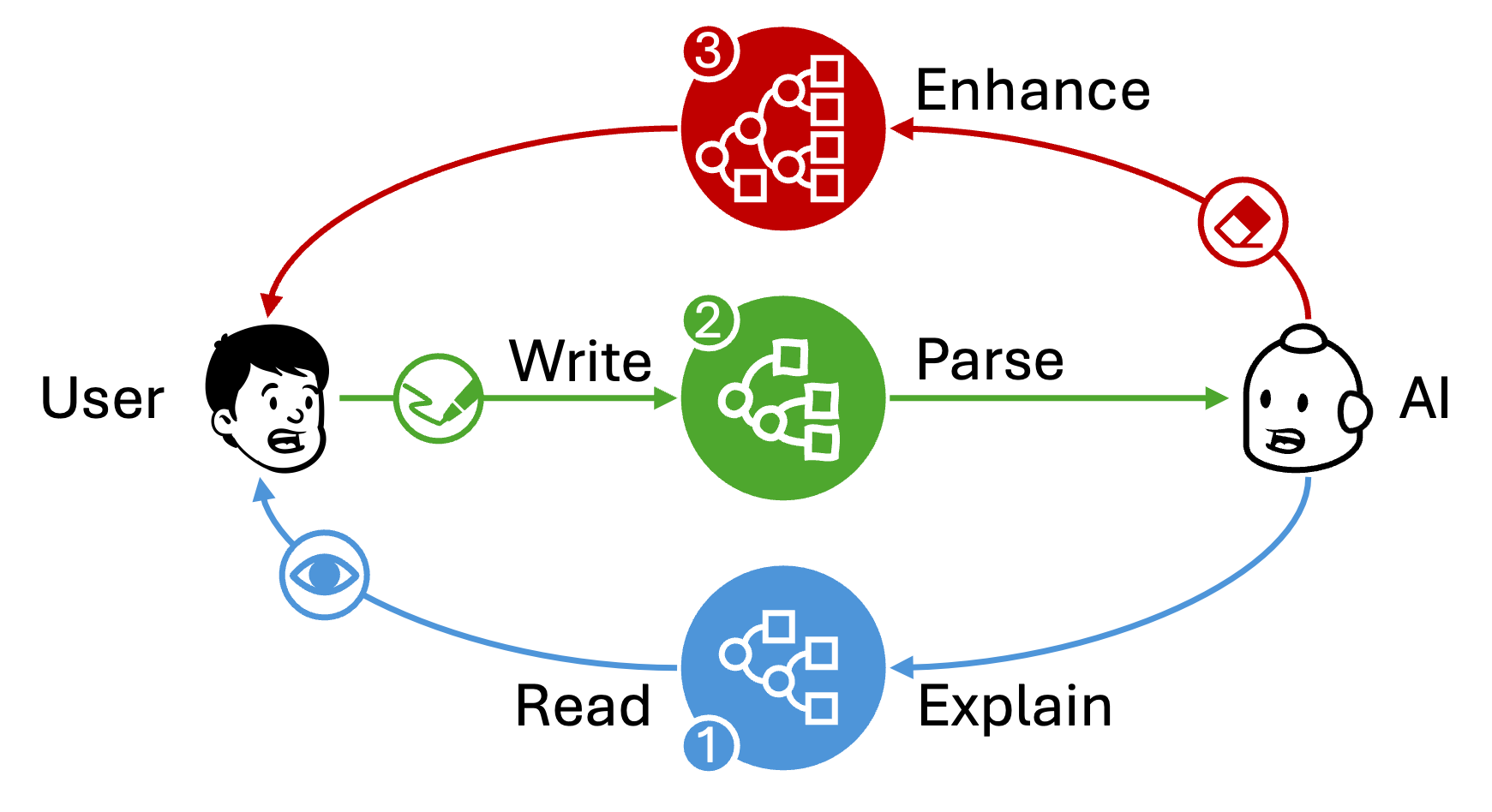

Overview:

While Explainable AI (XAI) helps users understand AI decisions, misalignment in domain knowledge can lead to disagreement. This inconsistency hinders understanding, and because explanations are often read-only, users lack the control to improve alignment. We propose making XAI editable, allowing users to write rules to improve control and gain deeper understanding through the generation effect of active learning. We developed CoExplain, leveraging a neural network for universal representation and symbolic rules for intuitive reasoning on interpretable attributes. CoExplain explains the neural network with a faithful proxy decision tree, parses user-written rules as an equivalent neural network graph, and collaboratively optimizes the decision tree. In a user study (N=43), CoExplain and manually editable XAI improved user understanding and model alignment compared to read-only XAI. CoExplain was easier to use with fewer edits and less time. This work contributes Editable XAI for bidirectional AI alignment, improving understanding and control.

- Modularized Interpretable Medical Decision Support System with Visual Programming

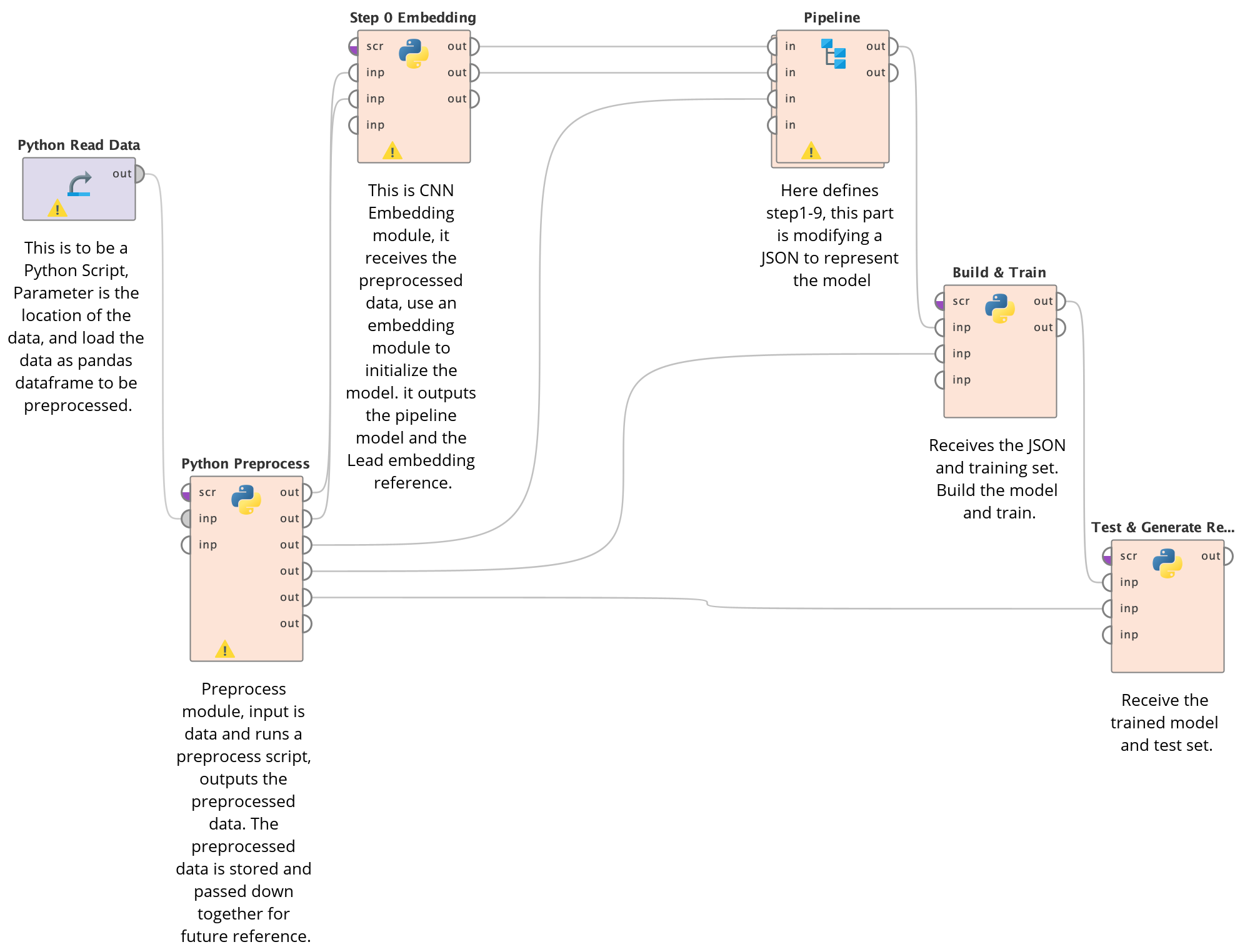

Overview:

Current medical decision support systems (MDSS) provide fixed guidelines to doctors, yet each doctor has a unique way of diagnosing, e.g., thresholds of indices and the sequence of examinations. Existing medical prediction tools also offer poor interpretability, which is confusing to doctors. This project focuses on enabling doctors to build diagnostic models according to their individual preferences. With our toolkit, doctors can use visual programming to customize a prediction model that is highly interpretable and precise in practice.

- i-Rebalance: Personalized Vehicle Repositioning for Supply Demand Balance

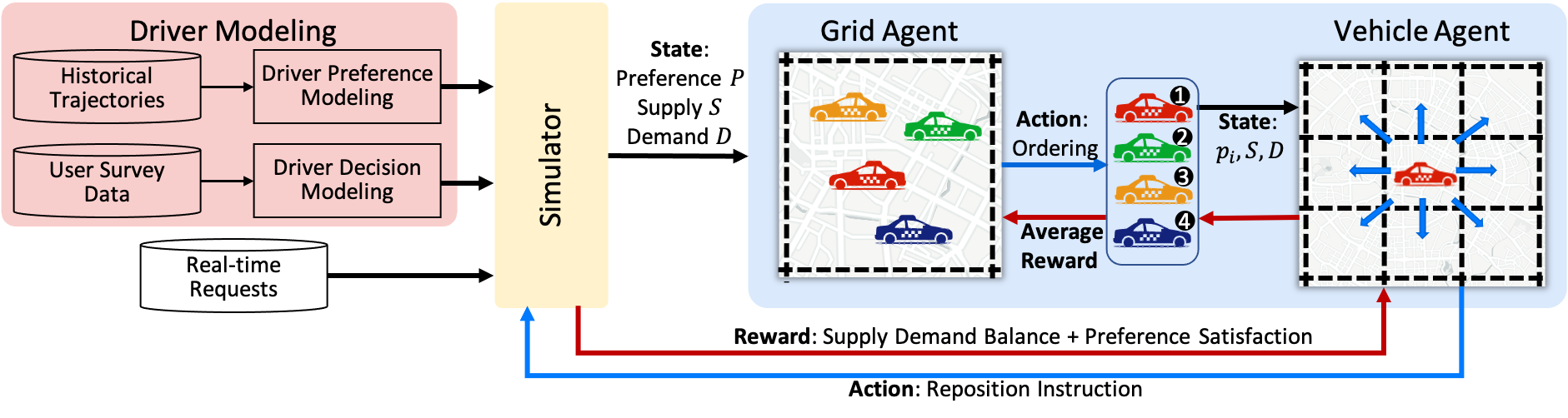

Overview:

Traditional vehicle repositioning techniques used in ride-hailing have little effect because they ignore drivers' preferences and response behaviors. This project first demonstrates that drivers have personalized cruising preferences, and shows through experiments that these preferences need to be considered when repositioning. Our field user study of 106 professional drivers further confirmed that drivers' preferences are a key factor in their decision to accept or reject a repositioning. Our solution consists of three key modules: an LSTM predictor of drivers' preferences, a driver decision model, and a dual-agent DRL framework. Together they aim to both satisfy driver preferences and balance supply and demand. [GitHub Repo]

- Ear Motion Tracking System for VR Devices

Overview:

Virtual Reality is trending. While devices like the Xbox Adaptive Controller provide accessibility for people in need, they are still hard to use in VR because people cannot see through a VR headset: when they have to press a button, they can hardly find it without seeing it. This project detects ear motion and uses it as input to VR devices, replacing traditional controllers to provide accessibility for people with special needs. Our user study with 15 volunteers showed that this method is effective and easy to use. This device gives everyone the ability to control a VR device, even if they are not good at moving their ears.

[GitHub Repo]

Side Projects

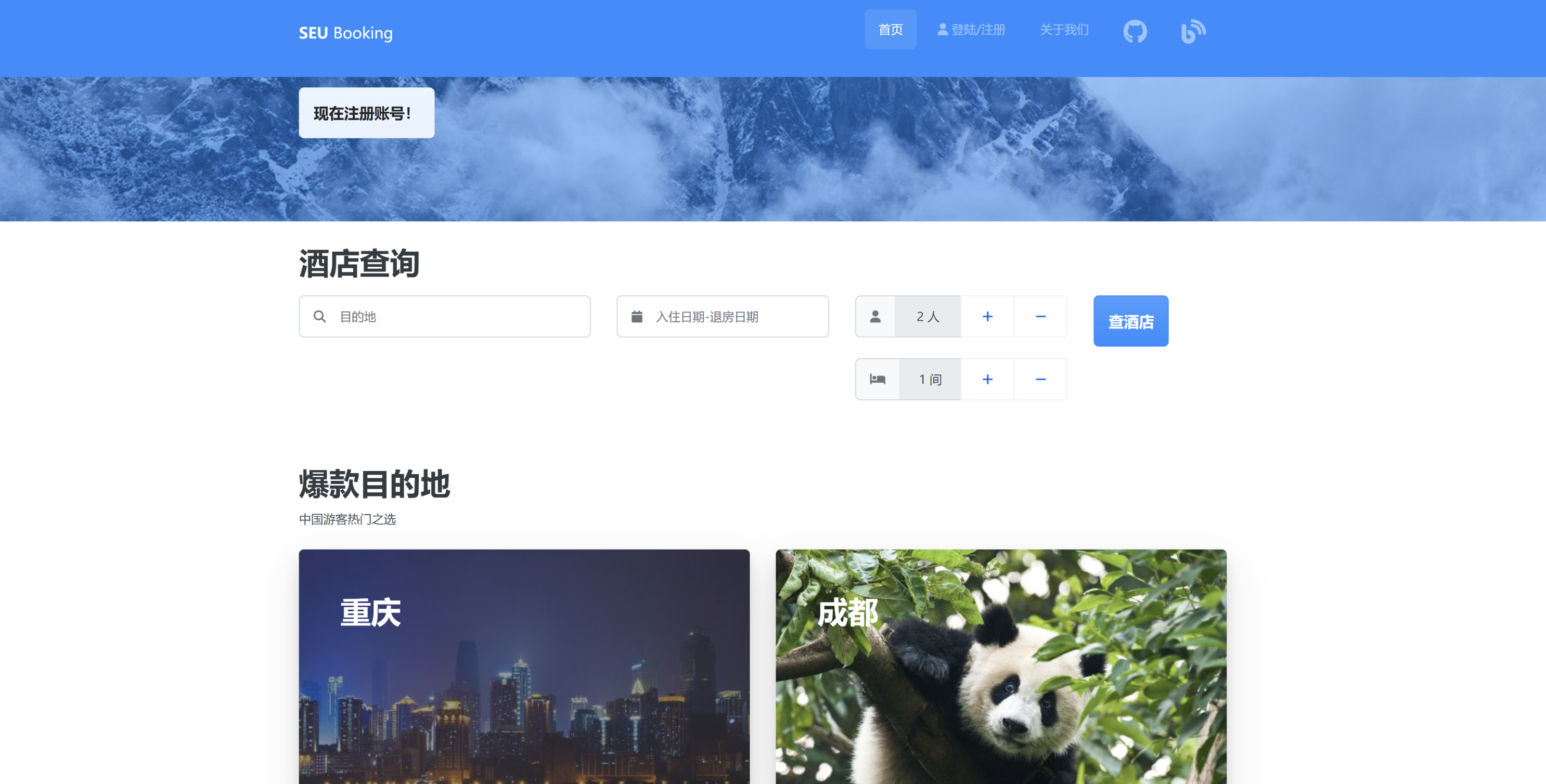

- C-H-ina: An Online Hotel Searching Service

Overview:

This project aims to build an online hotel search website that provides access to hotels all over China. We crawled data and pictures of over 10,000 hotels from booking.com. The website was built using the SSM framework (Spring, Spring MVC, MyBatis) and has been in service for a semester.

- Industrial Big-Data Inspection System

Overview:

IoT devices are widely used in industries such as chemicals and electricity. While they provide real-time monitoring of facilities, they require inspection as well. This system aims to provide a convenient inspection system for factories, with a dashboard and an anomaly detection algorithm driven by an InfluxDB time-series database.

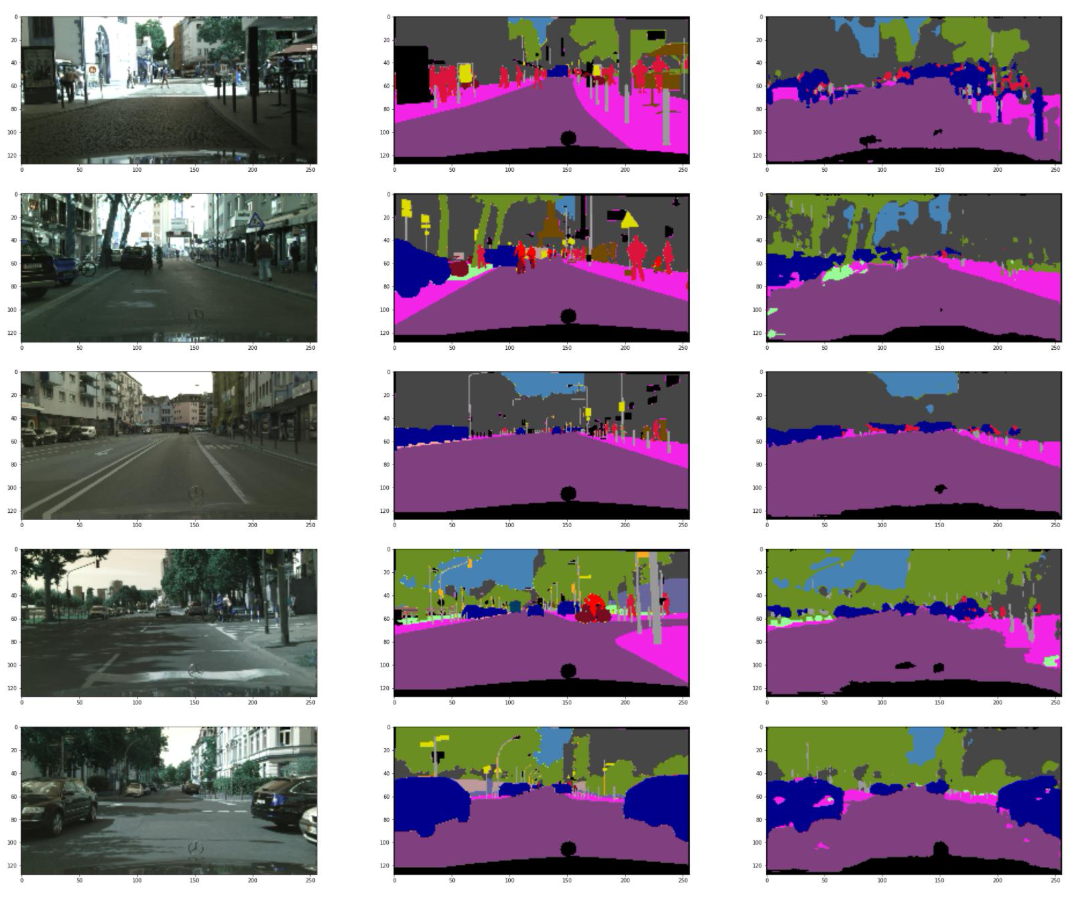

- Semantic Segmentation on Cityscapes Dataset

Overview:

Semantic segmentation is an essential technique in autonomous driving. It helps the computer distinguish the different objects captured by the camera, making further decisions possible. We trained a U-Net semantic segmentation model on the Cityscapes dataset, achieving an accuracy of 76.75%.

Haoyang Chen

PhD Student

School of Computing

National University of Singapore